From filtering to extracting

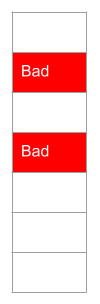

In the last lesson, you learned to filter data, marking errors and warnings.

This time, let's extract the good data, into a separate data set.

Then we can analyze the new data set, without worrying about bad data.

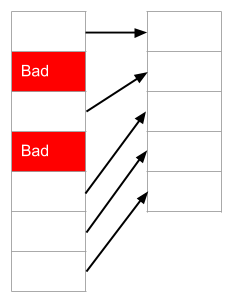

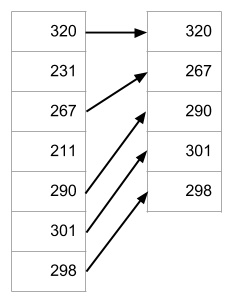

You can use the same method to analyze a subset of the data. For example, suppose we have sales data. The Big Boss wants an analysis of sales that are above $250. We'll go through the data set, copying sales above $250 to a new data set.

The new data set is a subset of the original.

Thunking

Why would you want to extract a separate data set, before processing it? Confession: There are no computations that you can do with the extracted high sales data, that you couldn't so with the original mixed sales data. You just add some If statements in with the processing code.

The reason you extract the high sales data has nothing to do with the data. It has to do with the code that processes that data.

Here's a program that uses a separate extraction step:

extractData dataOK

If Not dataOK Then

End

End If

processData

outputResultsNotice how simple the code is. extractData deals with all the messy data testing stuff. The code is isolated into that sub.

processData is a separate step. Hand it the data that has been extracted. The data is clean, so it only has to worry about it's own task.

You can test and debug extractData on its own, without having to think about the rest of the code. You can get it right, and then move on.

You can test and debug processData on its own, without having to think about the rest of the code. You can get it right, and then move on.

Separating the data first, before processing it, lets you write clean, elegant code. Code that's easy to test, debug, and change. Because the code is easier to test and debug, it will be more reliable. It will give management the right answers every time.

Mix data extraction, processing, and output together, and you get code that's harder for your brain. Harder to write, harder to debug. Harder to change when The Big Boss says, "Hey great! Now can you add…" Errors have an easier chance of slipping through.

It's all about the thunking. Thinking-in-chunks. Make a separate extractData chunk, and your code will be more reliable. That's how professionals think. Not just "Does the code run?" But "Does the code run? Is it reliable? Is it easy to change?"

Where to put extracted data

Question: Where does the extracted data go?

Answers:

- Into the workbook.

- Into an array.

Let's look at them both. We'll also see how data extraction looks with complete programs, so you can see good thunking at work.

Summary

In the last lesson, you learned to filter data, marking errors and warnings. Now let's extract the good data, into a separate data set.

Separating data before processing it lets you write clean, elegant code. Code that's easy to test, debug, and change. Because the code is easier to test and debug, it will be more reliable. It will give management the right answers every time.